World-Class Computing Performance in the Hands of Your Team

Your data science team depends on computing performance to gain insights and innovate faster through the power of AI and deep learning. Built for that team, NVIDIA® DGX Station™ is the world’s fastest workstation for leading-edge AI development. DGX Station is the only workstation with four NVIDIA® Tesla® V100 Tensor Core GPUs, integrated with a fully connected four-way NVIDIA NVLink™ architecture. With 500 TFLOPS of mixed-precision supercomputing performance, your entire team can experience over 2X the training performance of today’s fastest workstations.

- 72X the performance for deep learning training, compared with CPU-based servers

- 100X speedup on large data set analysis, compared with a 20-node Spark server cluster

- 5X the bandwidth of PCIe, with NVLink technology

- Maximized versatility, with deep learning training and inferencing at over 30,000 images per second

Experiment faster and iterate more frequently for effortless productivity.

| Specification | DGX Station |
| --- | --- |
| GPUs | 4X Tesla V100 |
| TFLOPS (Mixed Precision) | 500 |
| GPU Memory | 128 GB total system |
| NVIDIA Tensor Cores | 2,560 |
| NVIDIA CUDA® Cores | 20,480 |
| CPU | Intel Xeon E5-2698 v4 2.2 GHz (20-Core) |
| System Memory | 256 GB RDIMM DDR4 |
| Storage | Data: 3X 1.92 TB SSD RAID 0; OS: 1X 1.92 TB SSD |
| Network | Dual 10GBASE-T (RJ45) |
| Display | 3X DisplayPort, 4K resolution |
| Additional Ports | 2X eSATA, 2X USB 3.1, 4X USB 3.0 |
| Acoustics | 35 dB |
| System Weight | 88 lbs / 40 kg |
| System Dimensions | 518 D x 256 W x 639 H (mm) |
| Maximum Power Requirements | 1,500 W |
| Operating Temperature Range | 10–30 °C |
| Software | Ubuntu Desktop Linux OS, Red Hat Enterprise Linux OS, DGX Recommended GPU Driver, CUDA Toolkit |
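The GPU totals in the DGX Station table can be broken down per GPU with simple division. The following Python sketch is illustrative only: it assumes the four GPUs are identical and contribute equal shares, and the variable names are made up for this example rather than taken from any NVIDIA tool.

```python
# Totals copied from the DGX Station specification table.
GPU_COUNT = 4
totals = {
    "mixed_precision_tflops": 500,
    "gpu_memory_gb": 128,
    "tensor_cores": 2_560,
    "cuda_cores": 20_480,
}

# Assumption: all four GPUs are identical, so each contributes an equal share.
per_gpu = {name: value / GPU_COUNT for name, value in totals.items()}

for name, value in per_gpu.items():
    print(f"{name}: {value:g} per GPU")
```

Under that assumption, each GPU accounts for 125 TFLOPS of mixed-precision peak performance, 32 GB of memory, 640 Tensor Cores, and 5,120 CUDA cores.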

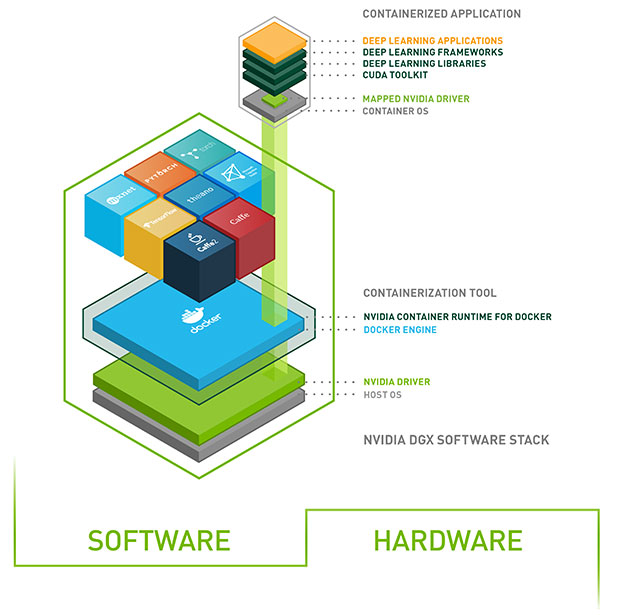

This integrated hardware and software solution gives your data science team easy access to a comprehensive catalog of NVIDIA-optimized, GPU-accelerated containers that offer the fastest possible performance for AI and data science workloads, along with the fully optimized DGX Software Stack.

DGX-1

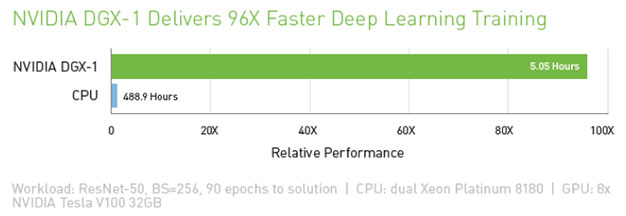

DGX-1 removes the burden of continually optimizing your deep learning software and delivers a ready-to-use, optimized software stack that can save you hundreds of thousands of dollars.

| Specification | DGX-1 |
| --- | --- |
| GPUs | 8X NVIDIA Tesla V100 |
| Performance (Mixed Precision) | 1 petaFLOPS |
| GPU Memory | 256 GB total system |
| CPU | Dual 20-Core Intel Xeon E5-2698 v4 2.2 GHz |
| NVIDIA CUDA® Cores | 40,960 |
| NVIDIA Tensor Cores (on Tesla V100 based systems) | 5,120 |
| Power Requirement | 3,500 W |
| System Memory | 512 GB 2,133 MHz DDR4 RDIMM |
| Storage | 4X 1.92 TB SSD RAID 0 |
| Operating System | Canonical Ubuntu, Red Hat Enterprise Linux |
| System Weight | 134 lbs |
| System Dimensions | 866 D x 444 W x 131 H (mm) |
| Packaging Dimensions | 1,180 D x 730 W x 284 H (mm) |
| Operating Temperature Range | 5–35 °C |

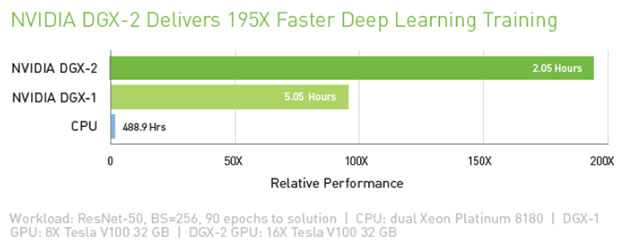

DGX-2

| Specification | DGX-2 |
| --- | --- |
| GPUs | 16X NVIDIA® Tesla® V100 |
| GPU Memory | 512 GB total |
| Performance | 2 petaFLOPS |
| NVIDIA CUDA® Cores | 81,920 |
| NVIDIA Tensor Cores | 10,240 |
| NVSwitches | 12 |
| Maximum Power Usage | 10 kW |
| CPU | Dual Intel Xeon Platinum 8168, 2.7 GHz, 24 cores |
| System Memory | 1.5 TB |
| Network | 8X 100 Gb/sec InfiniBand/100GigE; dual 10/25/40/50/100GbE |
| Storage | OS: 2X 960 GB NVMe SSDs; internal storage: 30 TB (8X 3.84 TB) NVMe SSDs |
| Software | Ubuntu Linux OS, Red Hat Enterprise Linux OS (see software stack for details) |
| System Weight | 360 lbs (163.29 kg) |
| Packaged System Weight | 400 lbs (181.44 kg) |
| System Dimensions | Height: 17.3 in (440.0 mm); Width: 19.0 in (482.3 mm); Length: 31.3 in (795.4 mm) without front bezel, 32.8 in (834.0 mm) with front bezel |
| Operating Temperature Range | 5°C to 35°C (41°F to 95°F) |
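For a rough side-by-side view of the three systems, the peak performance figures and maximum power ratings listed in the specification tables can be combined into per-GPU and per-kilowatt ratios. The Python sketch below is a back-of-the-envelope comparison, not a benchmark: it uses peak mixed-precision ratings, the power figures cover the whole system (CPUs, storage, and cooling included), and the `System` class and helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class System:
    name: str
    gpus: int
    peak_tflops: float   # peak mixed-precision rating from the tables
    max_power_w: float   # maximum whole-system power from the tables


SYSTEMS = [
    System("DGX Station", 4, 500, 1_500),
    System("DGX-1", 8, 1_000, 3_500),      # 1 petaFLOPS = 1,000 TFLOPS
    System("DGX-2", 16, 2_000, 10_000),    # 2 petaFLOPS, 10 kW
]


def tflops_per_gpu(system: System) -> float:
    """Peak TFLOPS divided evenly across the GPUs."""
    return system.peak_tflops / system.gpus


def tflops_per_kw(system: System) -> float:
    """Peak TFLOPS per kilowatt of maximum system power."""
    return system.peak_tflops / (system.max_power_w / 1000)


for s in SYSTEMS:
    print(f"{s.name}: {tflops_per_gpu(s):.0f} TFLOPS/GPU, "
          f"{tflops_per_kw(s):.1f} TFLOPS/kW")
```

The per-GPU figure comes out the same for all three systems, which is consistent with each being built on the same Tesla V100 GPU. The per-kilowatt ratio should be read with care, since maximum power is a provisioning number rather than a measurement of typical draw.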